Time to improve on how we handle our logs?

How log management is undertaken for many hasn’t progressed in approach for more than twenty years. At the same time, we’ve seen improvements in storing and searching semi-structured data. These improvements allow us to have better analytical processes that can be applied to log content once aggregated. I believe we’re often missing some great opportunities with how we handle the logs between their creation and putting them into some store.

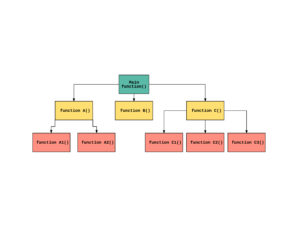

This illustrates how more traditional non-microservice thinking with logging and analytics is.

Yes, Grafana, Prometheus, and observability have come along, but their adoption has focused more on tracing and metrics, not extracting value from general logging. In addition, adopting these tools has been focussed on the container-based (micro)service ecosystems. Likewise, the ideas of Google’s Four Golden Signals emphasize metrics. Yet vast amounts of existing production software (often legacy in nature) are geared towards generating logs and aren’t necessarily running in containerized environments.

The opportunities I believe we’re overlooking relate to the ability to examine logs as they’re created to spot the warning signs of bigger issues or at least be able to get remediation processes going the moment things start to go wrong. Put simply, becoming rapidly reactive, if not becoming pre-emptive, in problem management. But before we delve more into why and how we can do this, let’s take stock of what the 12 Factor Apps document says about this.

When the 12 Factor App principles were written, they addressed some guidelines for logs. The seeds of potential with Logs were hinted at but weren’t elaborated upon. In some respects, the same document also influences thinking in the direction of the traditional approach of gathering, storing, and analyzing logs retrospectively. The 12 Factor App statement about logging has, I think, a couple of key points, both right and, I’d argue if taken literally wrong. These are:

- logs are streams of events

- we should send logs to stdout’ and let the infrastructure sort out handling the logs

- The description of how logs are handled either reviewed as they go to stdout or examined in a database such as OpenSearch using tools such as Fluentd.

We’ll return to these points in a moment, but we need to be mindful of how microservices development practices move the possibilities of log handling. Development here has driven the development and adoption of the idea of tracing. Tracing works by associating with an event a unique Id. As that unique Id flows through the different services. The end-to-end execution could be described as a transaction, which then when may make use of new ‘transactions’ (literal in terms of database persistence’ or conceptual in terms of the scope of functionality. Either way, these sub-transactions will also get their trace Id linked to the parent trace Id (sometimes called a context). These transactions of more called spans and sub-spans. The span information is typically carried with the HTTP header as the execution traverses through the services (but there are techniques) for carrying the information using asynchronous communications such as Kafka. With the trace Ids, we can then associate log entries. All of this can be supported with frameworks such as Zipkin and OpenTracing. What is more forward-thinking is OpenTelemetry which is working towards providing an implementation and industry stand specification, which brings the ideas of OpenCensus (an effort to standardize metrics), OpenTracing, and the ideas of log management from Fluentd.

OpenTelemetry’s efforts to bring together the three axes of solution observability hopefully create some consistency and maximize the opportunities of making it easier to link behaviors shown through the visualized metrics easier to link with traces and logs that describe what software is doing. While OpenTelemetry is under the stewardship of the CNCF, we should not assume it can’t be adopted outside of cloud-native/containerized solutions. OpenTelemetry addresses issues seen with software that have disturbed characteristics. Even traditional monolithic applications with a separate database have distributed characteristics.

The 12 Factor App and why should we be looking for evolution?

The reason for looking for evolution is mentioned briefly in the 12 Factor App. Logs represent a stream of events. Each event is typically constructed from some semi of fully-structured data (either general descriptive text and/or structured content reflecting the data values being processed). Every event has some universal characteristics, as a minimum, a timestamp. Ideally, the event has other metadata to help, such as the application runtime, thread, code path, server, etc. If logs are a stream of events, then why not bring the ideas from stream analytics to the equation, particularly that we can perform analytical processes and decisions as events occur? The technologies and ideas around stream processing and stream analytics have evolved, particularly in the last 5-10 years. So why not exploit them better as we pass the stream of logs to our longer-term store?

Evaluating log events when they are still streaming through our software environment means we stand a chance of observing warning signs of a problem and enabling actions to be applied before the warning signs become a problem. Prevention is better than a cure. The cost of prevention is far lower than the cost of the cure. The problem is that we perceive preventative actions as expensive as the investment may never have a return. Put another way, are we trying to prevent something that we don’t believe will ever happen? Humans are predisposed to risk-taking and assuming that things won’t happen.

If we consider the fact that compute power continues to accelerate, and with it, our ability to crunch through more data in a shorter period. This means that when something goes wrong, a lot more disruption can occur before we intervene when we don’t work on a proactive model. To use an analogy, if our compute power is a car and the volume and value of the data are related to the car’s value. If our car could travel at 30mph ten years ago, crashing into a brick wall would be painful and messy, and repairing the car is going to cost and take time – not great, but unlikely to put us out of business. Now it can do 300mph; hitting the same wall will be catastrophic and fatal. Not to mention whoever had to clear up the fallout has got to replace the car, the impact with have destroyed the wall, and the energy involved would mean debris flung for 100s of meters – so much more cost and effort it could now put us out of business.

Take the analogy further; car manufacturers recognize accidents as much as we try to prevent them with legislation on speed, enforcement with cameras, and contractual restrictions with car insurance such as classes excluding racing, etc., accidents still happen. So, we try to mitigate or prevent them with better braking with ABS. Vehicle proximity and lane drift alarms. We are mitigating the severity of the impact through crumple zones, airbags, and even seat belts and their pretensions. In our world of data, we also have legislation and contracts, and accidents still happen. But we haven’t moved on much with our efforts to prevent or mitigate.

Compute power has had secondary indirect impacts as well. As we can process more data, we can gather more data to do more things. As a result, there can be more consequences when things go wrong, particularly regarding data breaches. Back to our analogy, we’re now crashing hypercars.

One reaction to the higher risks and impacts of accidents with cars or data is often more legislation and compliance demands on handling data. It is easy to accept more legislation – as it impacts everyone. But that impact is not consistent. It would be easy to look at logs and say they aren’t impacted. It’s the noise we must have as part of processing data. How often, when developing and debugging code, do we log the data we’re handling – it’s common from my experience, and in non-production environments, so what? Our data is synthetic, so even if the data was sensitive in nature logging, it isn’t going to harm. But along, suddenly, something starts going wrong in production; a quick way to try to understand what is happening is to turn up our logging. Suddenly, we’ve got sensitive data in our logs which we’ve always treated as not needing secure treatment.

Returning to the 12 Factor App and its recommendation on the use of stdout. The underlying goal is to minimize the amount of work our application has to perform regarding log management. It is correct that we should not burden our application with unnecessary logic. But resorting simply to stdout creates a few issues. Firstly, we can’t tune our logging to reflect whether we’re debugging, testing, or operating in production without introducing our own switches in the code. Something that becomes implicitly handled by most logging frameworks for us. More code means more chances of bugs. Particularly when code has not been subject to extended and repeated use as a shared library. In addition to elevated bug risk, the chances of sensitive data being logged also go up, as we’re more likely to leave stdout log messages than remove them. If the potential for logs goes up for production, so does the chance of it including sensitive data.

Firstly if we avoid the literal interpretation of the 12 Factor App of using stdout but look more at from the idea that our application logic should not be burdened with code for log management but utilizing a standard framework to sort that out, then we can keep our logic free of reams of code sorting out the mundane tasks. At the same time, maximizing consistency and log structure then, our tools can easily be configured to watch the stream as it passes the events to the right place(s). If we can identify semi or fully-structured log events, it becomes easy to raise the flag immediately that something is wrong.

The next issue is that stdout involves our I/O and more compute cycles. I’ve already made the point about ever-increasing compute performance. But performance investment in non-functional spaces always draws concerns, and we’re still chasing the performance issues to keep solution costs down.

We can see this with the effort to make containers start faster and tighten footprints of interpreted and byte code languages with things like GraalVM and Quarkus generating hardware-specific native binaries. Not only that, I pointed to the fact that to get value from logs, we need to have meaning. What is worse, a small element of logging logic in our applications so we can efficiently hand off logs and the receiver has an implicit or explicit understanding of the structure, or we have to run more logic to derive meaning from the log entries from scratch, using more compute effort, more logic, and more error-prone? It is correct that the main application should not be subject to performance issues that a logging mechanism might have and any back pressure impacting the application. But the compromises should never be to introduce greater data risks. To my mind using a logging framework to pass the log events off to another application is an acceptable cost (as long as we don’t stuff the logging framework with rafts of complex rules duplicating logs to different outputs etc.).

If we accept the question –isn’t it time to make some changes to up the game with our use of logging, then what is the answer?

What’s the answer?

The immediate reaction to this is to look at the latest, most innovative thinking in the operational monitoring space, such as AI Ops – the idea of AI detecting and driving problem resolution autonomously. For those of us who are fortunate to work for an organization that embraces the latest and greatest and isn’t afraid of the risks and challenges of working on the bleeding edge – that’s fantastic. But you fortunate souls are the minority. Many organizations are not built for the risks and costs of that approach; to be honest, only some developers will be comfortable with such demands. The worst that can happen here is that the conversation to try to improve things gets shut down and can’t be re-examined.

We should consider a log event life more like this:

This view shows a more forward-thinking approach. ~While it looks complex, using tools like Fluentd means it is relatively easy to achieve. The complex parts are finding the patterns and correlations indicative of a problem before it occurs.

Returning to the 12 Factor App again. Its recommendation for using services like Fluentd and thinking of logging as a stream can take us to a more pragmatic place. Fluentd (and other tools) are more than just automated text shovels taking logs from one place and chucking it into a big black hole of a repository.

With tools like Fluentd, we can stream the events away from the ‘frontline’ compute and process the events with filters, route events to analytics tools and modern user interfaces or even trigger APIs that could execute auto-remediation for simple issues such as predefined archiving actions to move or compact data. At the simplest – a mature organization will develop and maintain a catalog of application error codes. That catalog will reflect likely problem causes and remediation steps. If an organization has got that far, there will be an understanding of which codes are critical and which need attention, but the system won’t crash in the next 5 minutes. If that information is known, it is a simple step to incorporate into an event stream processing the checks for those critical error codes and, when detected, use an efficient alerting mechanism. The next potential step would be to look for patterns of issues that together indicate something serious. Tools like Fluentd are not sophisticated real-time analytics engines. But in terms of simplicity, turning specific logs events into signals that can be processed with Prometheus can handle, and without introducing any heavy data science, we have the potential to handle situations such as how many times do we get a particular warning? Intermittent warnings may not be an issue as the application or another service could sort the issue out as part of standard housekeeping, but if they come frequently, then intervention may be needed.

Using tools like Fluentd won’t preclude the use of the slower bulk analytics processing, and as Fluentd integrates with such tools, we can keep those processes going and introduce more responsive answers.

We have seen a lot of advancement with AI. A subject that has been discussed as delivering potential value since the 80s. But in the last half-decade, we’ve seen changes that have meant AI can help in the mainstream. While we have seen mentions of AIOps in the press –. AI can help in very straightforward, practical means of extracting and processing written language (logs are, after all, written messages from the developer). The associated machine learning helps us build models to find patterns of events that can be identified as significant markers of something important, like a system failure. AIOps may be the major long-term evolution, but for the mainstream organization – that is still a long way downstream, but simple use cases for detecting the outlier events (supported by services such as Oracle Anomaly Detection) aren’t too technically challenging, and using AI’s language processing to help better process the text of log messages.

Finally, the nature of tools like Fluentd means we don’t have to implement everything from the outset. It is straightforward to progressively extend the configuration and continuously refine and improve what is being done, all of which can be achieved without adversely impacting our applications. Our previous diagram helps indicate a path that could reflect progressive/iterative improvement.

Conclusion

I hope this has given pause for thought and highlighted the risks of the status quo, and things could advance.