Online Learning

Machine learning and AI are concepts that are ever-growing and are continually being integrated into the workloads of more enterprises. If you are in the software field, you probably have at least an idea on how machine learning actually happens – a certain model is trained on the training data, perhaps with cross-validation. The trained model is then measured in effectiveness using the test data. If the model is sufficiently successful on the metric of your choice, then it gets deployed to be used for any new, unseen data for future predictions.

This process, well-known to many, is actually offline or batch machine learning. In offline learning, certain assumptions are made: it is assumed that there exist relevant and reasonably-sized data that the model can be trained on, and more importantly, the future data on which the predictions will be performed are sampled from the same distribution as the training data. This is also known as the independent and identically distributed random variables (i.i.d.) assumption.

Online machine learning versus batch learning. (a) Batch machine learning workflow; (b) Online machine learning workflow, from Zheng et.al. Effective Information Extraction Framework for Heterogeneous Clinical Reports Using Online Machine Learning and Controlled Vocabularies

These assumptions can be valid for practical settings. When analyzing the works of an author, it might be assumed that the author has a consistent writing style, and all their work inherits, in some way, this style. When building a machine learning model to predict whether a given text can be from this particular author, it can be valid to hold to the i.i.d. assumption, and train a large model on the works of the author.

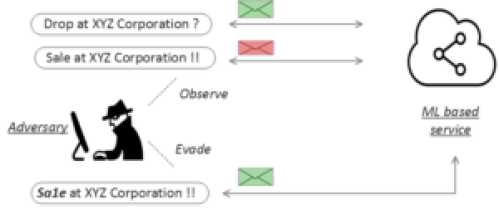

However, i.i.d. assumption does not always hold, and is not always practical. A classic example is adversarial spam filtering.

Spam Email Filtering

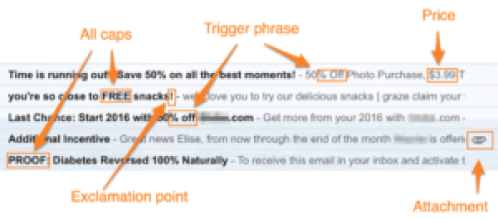

One basic example where the i.i.d. assumption doesn’t hold comes in the form of an adversarially adaptive setting: spam filtering. This is a binary classification problem, where each data point, e.g. an email, is classified either as ‘spam’ or ‘not spam’.

Consider the following scenario: we have a machine learning model for spam filtering, that has been trained on a large data set, and achieves 95% accuracy. Since this accuracy is sufficiently well, the model is deployed to production. However, in time, the model’s strength goes down, and it lets spam email get through. Why?

Because people that generate spam email are playing it smart – when they notice that their emails are not being opened, or the ads are not being clicked, they tweak their content to fool the model we deployed. The future data is no longer sampled from the same distribution that we trained the model on!

This is an adversarial setting – the spammers are adversaries to our model. What is the solution? For this simple example, the solution can also be simple: the developer can manually re-train the model again as new data come in, and with a clever choice of loss function and success metric, they can put greater weight on the most recent data. Another option is to automate this process, and have the model re-trained in defined intervals, e.g. once every week, and then automatically deployed. This way, the overall performance stays relatively stable.

For the straightforward example of email spam filtering, offline learning with automated re-training can work well. The worst that can happen is, the adversaries play it cunningly and right after a model deployment, they start sending smarter spam. Some spam emails manage to get through the model, landing on the user’s inbox, until the next training phase where these new spam emails will be known to be spam.

Illustration of exploratory attacks on a machine learning based spam filtering system,

A natural follow-up is to circumvent this problem is shortening the time intervals between training the model. The shorter the interval, the less tweaking time the adversaries will have. When taken to the extreme, this approach converges to real-time updates to the model, as time intervals approach virtually 0. This practice then becomes known as ‘online learning’.

Online Learning

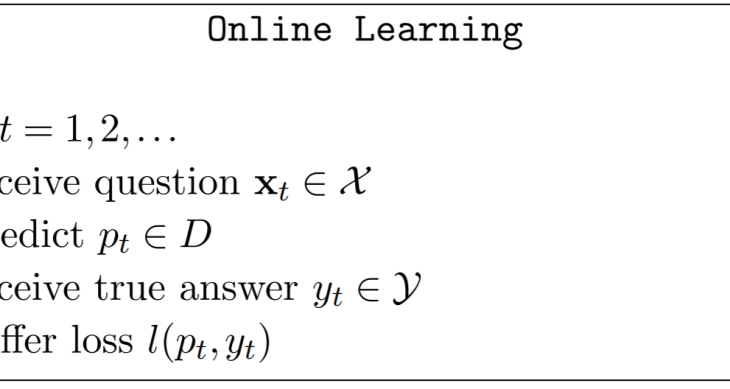

Formally, online learning is the process of answering a sequence of questions given knowledge of the correct answer to previous questions. Opposed to the classic offline machine learning, where all data points are available at training time, in online learning, data points come in sequences – we are only exposed to one data point at a time.

Online learning is performed in consecutive rounds denoted by t, where in each round the learner receives a question xt, and subsequently provides an answer pt. When the true answer is received, the learner suffers a loss, and the learner can update the model accordingly.

The notion of risk and loss is inherently different between offline and online learning. In offline learning, the risk is the expected loss over all the data points, since the data points are not sequential. In the online setting, data are seen as a sequence: the risk of the model changes with each new observation. At any point in time, T, the risk is

where pt and yt are as described above. The overall goal is to minimize regret, a different notion of cumulative loss. Regret is defined as

What this means is that the regret of an algorithm is the difference between the risk of the given algorithm and the risk of the algorithm that can achieve the minimum risk in hindsight. This formulation makes intuitive sense: the data is coming in discrete points, so we cannot make a holistic guess. Our model can be judged by the past predictions, and we can only regret the actions that we have not taken.

An intuitive example to this online learning setting is very well-known in the neural network area: stochastic or online gradient descent. Typically used during the optimization step of neural networks, gradient descent is an algorithm that aims to minimize a target function by iteratively moving in the direction of steepest descent as defined by the negative of the gradient.

Online gradient descent executes gradient descent by only taking a single sample, i.e. a batch size of 1, for each iteration. After obtaining a loss for this single example, the parameters, i.e. weights in neural networks, are updated according to the negative gradient of this loss. The similarity between the general pseudocode description of online learning and the algorithm for online gradient descent is apparent.

[From the slides of Wojciech Kotlowski, Institute of Computing Science, Poznań University of Technology]

When is online learning useful?

One of the main settings that require an online learning solution are when the data are too big to train a static offline model efficiently. Out-of-core training, where the data set does not wholly fit into the main memory, is not feasible in environments with limited resources. Then, the data can be treated as an incoming stream, and processed in an online fashion. Treating each data point as a new observation and iteratively tuning the learning model makes this possible. As can be seen from this setting, online learning does not always have to be ‘online’ in the common sense. Most of the current offline learning algorithms actually deploy online learning in the optimization step, e.g. online gradient descent.

Another setting where online learning is useful is when the underlying distribution of the data drifts over time. This occurrence is also known as concept drift. The changes in the distribution of the data can be attributed to numerous causes, one of the most prominent case being the adversarial setting like spam filtering. Other causes can be changes in population, changes in personal interests, or the complex nature of the environment.

Illustration of the four structural types of concept drift,

from Indrė Žliobaitė, Learning under Concept Drift: an Overview

Concept drift happens in various real-life applications. Žliobaitė divides them into four categories: monitoring control, personal assistance, decision making, and artificial intelligence. Some examples include fraudulent transaction detection in banking, customer profiling and dividing customers into non-stationary segments, forecasting in finance, and stock price prediction.

In Practice

Algorithms for online machine learning on streaming data is an active research area. Machine learning is a very broad area, and online machine learning can be integrated into existing paradigms, such as building online deep learning models.

Currently, there are numerous open source approaches to building machine learning models for online settings:

- MOA (Massive Online Analysis): A framework for data stream mining, written in Java. Developed at The University of Waikato, where popular machine learning framework WEKA was also developed, MOA is one of the most popular open-source tools for training machine learning models on streaming data. MOA currently supports stream classification, stream clustering, outlier detection, change detection and concept drift and recommender systems.

- scikit-multiflow: Inspired by MOA, scikit-multiflow is an open source machine learning framework for multi-output/multi-label and stream data, developed for Python.

- streamDM: Developed at Huawei Noah’s Ark Lab, streamDM is an open-source tool for mining big data streams using Spark Streaming.

Another approach is through scikit-learn. Numerous classifiers from scikit-learn’s model classes support a function called partial_fit(). Partial fit performs one iteration of optimization on the given samples, which in the online learning case can be a single sample. This method is used mainly for out-of-core training, where the whole training dataset cannot fit into memory.

In production, there are a variety of other aspects to consider: setting up a streaming platform for digesting incoming streams, such as Kafka or Spark, building pipelines to preprocess the data and perform feature extraction, setting up monitoring on the prediction process, and many more.

Hidden technical debt in machine learning systems: https://papers.nips.cc/paper/5656-hidden-technical-debt-in-machine-learning-systems.pdf

If deployed in an online setting, the models that have been built have to be fast to make predictions in real time and to update the model in real time, and they need to be available at all times. The task becomes especially complicated, since the model cannot be efficiently horizontally scaled – any incoming data point has to pass through a single model, and the model has to update accordingly. In a distributed scale, this would require efficient passing of parameters between models deployed in separate machines. Since the order of the data points matter in the model updates, this distributed setting becomes problematic.

Even though it’s being repeated everywhere, it’s nevertheless true that machine learning is growing bigger and is becoming integrated with more areas each year. There is exciting research going on in finding new paradigms within the machine learning sphere, and new frameworks and tools bring these cutting-edge machine learning concepts even closer to developers. In this rising trend within machine learning, with the growth of personalization in the online sphere, such as online customer behavior prediction and online ranking, and with the recent development in IoT, online machine learning will also start becoming more prominent.