Chinese Open Source Software

The major Chinese cloud providers have all adopted Kubernetes, the open source container orchestration system. The largest Kubernetes cluster whose size has been publicly disclosed runs at JD.com, the Chinese e-commerce giant. Like their American counterparts, Chinese tech companies know that contributing to open source is an investment that pays large dividends.

New open source projects are emerging from China’s thriving tech industry.

In the Kubernetes ecosystem, three major projects that started in China have been accepted into the Cloud Native Computing Foundation (CNCF): Harbor, TiKV, and Dragonfly. These projects are likely the first of many Chinese open source projects to be released in the near future.

The CNCF has played a pivotal role in giving American cloud providers a common forum in which to reach agreement. Now all of the major Chinese cloud providers have joined the CNCF as well, signaling an intent to adopt and contribute to open source software.

The CNCF is a division of the Linux Foundation devoted to supporting Kubernetes and other “cloud native” technologies. It offers neutral territory where competing players in the cloud ecosystem can find common ground. Thanks to the CNCF, efforts such as Certified Kubernetes and the Cloud Native Landscape make it easier for enterprises to adopt Kubernetes.

The rapid adoption of Kubernetes is leading to a boom in new infrastructure companies and open source projects. It’s difficult to overstate the impact that Kubernetes is having. Kubernetes has already reshaped the competitive dynamics of cloud providers by providing a common layer for scheduling and managing server resources.

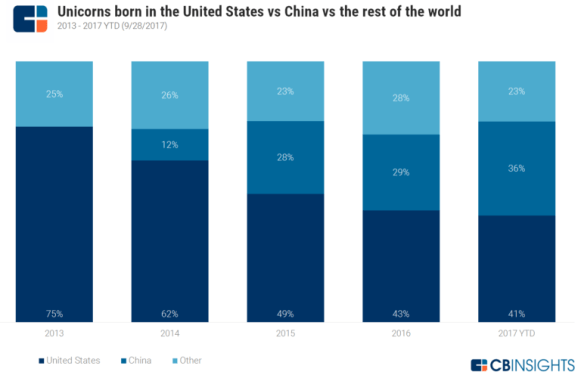

But what we have seen so far in the Kubernetes ecosystem might pale in comparison to what we will see if the Chinese fervor for open source engineering is anything like its fervor for company building.

Engineers in China are like engineers everywhere. They like to build software. They don’t like redundant work. They like to take advantage of economies of scale. They see the value of cooperation between companies.

Kai-Fu Lee documented the symbiotic relationship between the Chinese and American machine learning communities in his book AI Superpowers. The international dynamics of the Kubernetes ecosystem are likely to be even more positive-sum, and they are likely to take effect even faster, because enterprises are ready to adopt Kubernetes and are very willing to pay for it.

From banks to telecoms to insurance companies: every giant enterprise in the world wants to modernize its infrastructure and move faster. These enterprises want to buy Kubernetes, and the cloud providers and systems integrators want to sell it to them.

With so much money being spent by customers, the cloud providers are all happy to work together on improving the core infrastructure around Kubernetes. This includes the Chinese cloud providers.

A cloud provider should contribute to Kubernetes because the contribution process will make them more intimately familiar with the direction the ecosystem is heading in. When a company becomes a sponsor of the CNCF, they become privy to the latest internal developments of cloud native technology.

There is also a simple halo effect that comes from being a steward of an open source project that you get value from.

Kubernetes has created stronger incentives to contribute to open source than ever before. The governance of the CNCF is enabling this ecosystem to thrive with healthy guardrails and processes such as the CNCF Sandbox.

Now that we understand why Chinese tech companies are starting to contribute to open source, let’s look at the first three major projects to come out of China.

Dragonfly: P2P Container Image Distribution

A container image packages an application together with everything it needs to run. Container images are stored in a container registry. When a new container needs to start, Kubernetes schedules it onto a host, and that host must pull the image from the registry before the container can run.

In a large Kubernetes cluster, containers are being spun up and spun down all the time. Servers are dying, new applications are being launched, and existing applications are being scaled up to handle increased load. This dynamic environment puts significant strain on the network infrastructure that distributes container images.

Container images can be quite large, so they consume a lot of bandwidth and are vulnerable to interruption when nodes fail or the network drops. Ideally, an interrupted download would resume from where it left off, saving precious time and bandwidth.

With these considerations, how is file and image distribution handled within Alibaba Cloud?

Alibaba Cloud is the AWS of China. It is the biggest cloud provider in mainland China and has 19 regions around the world. It needs to deliver fast, reliable services to millions of people, especially during peak periods such as Double 11, China’s biggest shopping day, comparable to Black Friday or Cyber Monday in the US.

Operating at such a large scale, Alibaba took matters into its own hands. Instead of relying on traditional tools such as wget, curl, or FTP, Alibaba built its own file distribution tool and applied it to image distribution. The result is Dragonfly, a peer-to-peer (P2P) file distribution system.

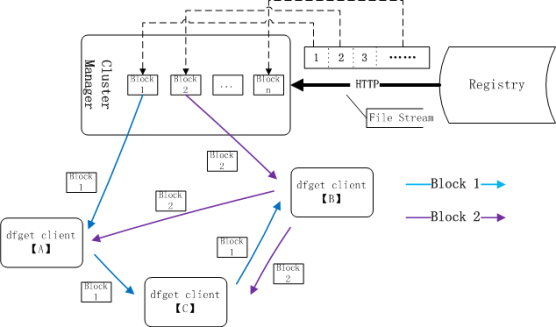

How does it work? In Dragonfly’s architecture, the P2P network involves two types of nodes: the cluster manager, also called the supernode, and the hosts, also called the peers. Each host runs dfget, Dragonfly’s download client.

When a peer requests an image, the cluster manager checks whether the image is already cached on disk in the current environment. If it is not, the cluster manager pulls the requested image from the registry.
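The cache-or-fetch step can be sketched in Python. This is a minimal illustration under stated assumptions, not Dragonfly’s code: the `SuperNode` class, the dict that stands in for the on-disk cache, and the `fetch_from_registry` callback are all hypothetical.

```python
from typing import Callable, Dict


class SuperNode:
    """Hypothetical cluster manager that caches images it has already pulled."""

    def __init__(self, fetch_from_registry: Callable[[str], bytes]) -> None:
        self._cache: Dict[str, bytes] = {}  # stands in for the supernode's local disk
        self._fetch_from_registry = fetch_from_registry
        self.registry_pulls = 0  # counts trips to the registry

    def get_image(self, ref: str) -> bytes:
        # Cache hit: serve the image without touching the registry.
        if ref in self._cache:
            return self._cache[ref]
        # Cache miss: pull once from the registry, then keep a local copy.
        data = self._fetch_from_registry(ref)
        self.registry_pulls += 1
        self._cache[ref] = data
        return data


# Three peers asking for the same image cause only one registry pull.
node = SuperNode(lambda ref: f"layers of {ref}".encode())
for _ in range(3):
    node.get_image("nginx:1.25")
print(node.registry_pulls)  # 1
```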

The image file is split into shards (pieces), which are downloaded over multiple threads. This speeds up the transfer, and the metadata kept during the download makes it easier to resume an interrupted download.
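A sketch of sharded, multithreaded downloading with resume metadata follows. It assumes a hypothetical `fetch(start, stop)` callback that returns one byte range of the image file and keeps completed pieces in an in-memory dict; a real client would persist pieces and their metadata to disk. The piece size is deliberately tiny.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

PIECE_SIZE = 4  # hypothetical; tiny so the example is easy to follow

# fetch(start, stop) returns the bytes in [start, stop) of the image file.
FetchFn = Callable[[int, int], bytes]


def piece_ranges(total_size: int, piece_size: int = PIECE_SIZE) -> List[range]:
    """Split [0, total_size) into consecutive byte ranges of at most piece_size."""
    return [range(s, min(s + piece_size, total_size))
            for s in range(0, total_size, piece_size)]


def sharded_download(total_size: int, fetch: FetchFn,
                     done: Dict[int, bytes], workers: int = 4) -> bytes:
    """Fetch missing pieces in parallel and reassemble the file.

    `done` maps piece index -> bytes and acts as the resume metadata: after an
    interruption, calling this again fetches only the pieces not yet recorded.
    """
    ranges = piece_ranges(total_size)
    missing = [i for i in range(len(ranges)) if i not in done]

    def fetch_piece(i: int) -> Tuple[int, bytes]:
        r = ranges[i]
        return i, fetch(r.start, r.stop)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in submission order; each piece is recorded as
        # soon as it is yielded, so a later failure keeps earlier progress.
        for i, data in pool.map(fetch_piece, missing):
            done[i] = data
    return b"".join(done[i] for i in range(len(ranges)))
```

Recording each piece as it arrives is what makes resuming cheap: a retry only requests the pieces whose indices are missing from `done`.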

As the cluster manager downloads the shards, it makes them available to the hosts. As soon as a host has downloaded a shard, that shard becomes available to the host’s peers. When a host has downloaded all of the shards, its download is complete.
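The sharing pattern can be illustrated with a toy, round-based simulation. The scheduling rules here are simplifying assumptions (one new registry piece per round, one piece per host per round, random choice of which missing piece to take), not Dragonfly’s actual scheduler.

```python
import random
from typing import Dict, List, Set, Tuple


def simulate_p2p(num_pieces: int, hosts: List[str],
                 seed: int = 0) -> Tuple[Dict[str, int], int]:
    """Toy round-based model of piece sharing among peers.

    Returns (round in which each host finished, number of registry fetches).
    Assumptions, not Dragonfly's scheduler: each round the supernode pulls one
    new piece from the registry, then each host copies one piece it lacks from
    any node (supernode or peer) that held it before hosts acted that round.
    """
    rng = random.Random(seed)
    have: Dict[str, Set[int]] = {"supernode": set(), **{h: set() for h in hosts}}
    finished: Dict[str, int] = {}
    registry_fetches = 0
    rnd = 0
    while len(finished) < len(hosts):
        rnd += 1
        # The supernode pulls the next piece from the registry, if any remain.
        if len(have["supernode"]) < num_pieces:
            have["supernode"].add(len(have["supernode"]))
            registry_fetches += 1
        # Snapshot of every piece held anywhere before hosts act this round.
        available = set().union(*have.values())
        for h in hosts:
            if h in finished:
                continue
            wanted = sorted(available - have[h])
            if wanted:
                have[h].add(rng.choice(wanted))
            if len(have[h]) == num_pieces:
                finished[h] = rnd
    return finished, registry_fetches
```

In this model the registry serves each piece exactly once, however many hosts join; every other transfer is served from within the cluster.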

This architecture results in a dramatic speed-up and makes downloading large images easy. Previously, too many simultaneous image requests created a network bottleneck at the registry; with peer-to-peer distribution, serve times stay consistent. Dragonfly handles thousands of parallel requests and completes each download in 12 seconds on average.

Dragonfly improves on its base architecture with additional features such as smart scheduling and smart compression. Image files are compressed before being distributed, increasing speed and reducing bandwidth usage.
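As a rough illustration of why compressing before distribution can save bandwidth, the sketch below uses Python’s `zlib` as a stand-in codec. Which algorithm Dragonfly uses, and how its smart compression decides when to compress, are not covered here; the function names are hypothetical.

```python
import os
import zlib


def compress_for_transfer(payload: bytes, level: int = 6) -> bytes:
    """Compress a file before sending it to peers (zlib is a stand-in codec)."""
    return zlib.compress(payload, level)


def decompress_after_transfer(blob: bytes) -> bytes:
    """Restore the original bytes on the receiving peer."""
    return zlib.decompress(blob)


text_like = b"config=true\n" * 1000  # repetitive data compresses well
random_like = os.urandom(12000)      # already-compressed data looks like this
for name, data in [("text-like", text_like), ("random-like", random_like)]:
    sent = compress_for_transfer(data)
    ok = decompress_after_transfer(sent) == data
    print(name, len(data), "->", len(sent), "round-trip ok:", ok)
```

The random-looking input barely shrinks and may even grow slightly. Image layers are usually stored as gzip-compressed tarballs already, which is one reason a system might compress selectively rather than unconditionally.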

What about design decisions other than speed?

Dragonfly supports numerous container technologies, such as Docker and Pouch, Alibaba’s container engine. It requires no changes to how container services are coded.

In P2P networks, security is a concern, since files can now be transferred between any two peers. Dragonfly already encrypts files during transmission, and its roadmap outlines further security work.

Now that it has been accepted into the CNCF, Dragonfly appears to have a promising future.

Harbor: Container Image Storage

Containers are spun up from container images. These images are held in a registry to make distribution of images easier. Registries allow sharing images, building automated CI pipelines, and creating a secure storage environment for images.

There are different options when it comes to registries: developers can use a hosted service such as Docker Hub, Amazon ECR, or Google Container Registry, or they can self-host their registries.

Not all organizations can store their images in the public cloud, however, due to privacy and security requirements.

Harbor is a cloud native image registry that stores, signs, and scans images. It started as a VMware project in China and has been accepted as a sandbox project into the CNCF. Harbor has a strong focus on security, trust, compliance, and performance.

What does Harbor offer?

Harbor has numerous features that improve upon the concept of a simple storage for container images.

Image Replication

Enterprises can have multiple registries for different stages of the application lifecycle. Images must be replicated as they become ready for the next stage of the pipeline.

Image builds are not deterministic: building the same Dockerfile twice may not produce an identical image. Harbor replicates images directly from the source, so every registry receives exactly the same image.
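Registries identify images by content digest, a SHA-256 hash of the bytes, which is why copying an image preserves its identity while rebuilding it may not. In this sketch, `build_image` is a hypothetical build that embeds a timestamp, as many real builds do.

```python
import hashlib
import json


def digest(blob: bytes) -> str:
    """Content digest in the 'sha256:<hex>' form used by OCI registries."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def build_image(dockerfile: str, build_time: str) -> bytes:
    # Hypothetical build: it records a timestamp, so rebuilding the same
    # Dockerfile yields different bytes and therefore a different digest.
    return json.dumps({"dockerfile": dockerfile, "created": build_time},
                      sort_keys=True).encode()


dockerfile = "FROM alpine:3.19\nRUN apk add curl\n"
original = build_image(dockerfile, "2019-01-01T00:00:00Z")
rebuilt = build_image(dockerfile, "2019-01-02T00:00:00Z")
replica = bytes(original)  # replication copies the bytes instead of rebuilding

print(digest(original) == digest(rebuilt))  # False
print(digest(original) == digest(replica))  # True
```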

Harbor lets users set rules that trigger image replication automatically. The initial replication copies the image unchanged. After that, replication is incremental: only changes to the original image are forwarded to the replica.
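Because image layers are addressed by digest, incremental replication can skip any layer the target registry already holds. The sketch below is a simplified model of that idea, not Harbor’s replication engine; the short digest strings are placeholders rather than real SHA-256 values.

```python
from typing import Dict, List


def replicate(layers: List[str], source: Dict[str, bytes],
              target: Dict[str, bytes]) -> List[str]:
    """Copy one image's layers from source to target, skipping known layers.

    Layers are keyed by content digest, so a digest already present on the
    target is identical content and need not be sent again.
    Returns the digests that were actually transferred.
    """
    copied = []
    for d in layers:
        if d not in target:
            target[d] = source[d]
            copied.append(d)
    return copied


source = {"sha256:base": b"os files", "sha256:app-v1": b"app v1",
          "sha256:app-v2": b"app v2"}
target: Dict[str, bytes] = {}
print(replicate(["sha256:base", "sha256:app-v1"], source, target))  # ['sha256:base', 'sha256:app-v1']
print(replicate(["sha256:base", "sha256:app-v2"], source, target))  # ['sha256:app-v2']
```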

With Harbor, developers can also replicate images globally, and have identical images in multiple sites. This can improve the latency of image pulls and pushes.

Image Security

Harbor deals with image security on two fronts: by enforcing role-based access control on the user front, and with content signing and vulnerability analysis on the container front.

Harbor lets administrators define roles and grant privileges, such as read or write access, based on those roles. This prevents unprivileged users from overwriting images.
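Role-based access control reduces to a lookup from role to permitted actions. The role names and grants below are illustrative assumptions, not Harbor’s exact permission matrix.

```python
from enum import Enum
from typing import Dict, FrozenSet


class Action(Enum):
    PULL = "pull"
    PUSH = "push"
    DELETE = "delete"


# Illustrative role table; the role names and grants are assumptions.
ROLE_PERMISSIONS: Dict[str, FrozenSet[Action]] = {
    "guest": frozenset({Action.PULL}),
    "developer": frozenset({Action.PULL, Action.PUSH}),
    "admin": frozenset(Action),  # every action
}


def is_allowed(role: str, action: Action) -> bool:
    """Deny by default: unknown roles get no permissions."""
    return action in ROLE_PERMISSIONS.get(role, frozenset())


print(is_allowed("guest", Action.PUSH))      # False: guests cannot overwrite images
print(is_allowed("developer", Action.PUSH))  # True
```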

With Notary, developers can sign the images that they push to the registry. A developer who later pulls an image can verify its signature against the image digest, confirming that the image has not been changed since it was signed.
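The sign-then-verify flow can be sketched as follows. Notary is an implementation of The Update Framework and uses public-key signatures; because Python’s standard library has no public-key signing, this sketch swaps in an HMAC with a shared key purely to show the flow of signing a digest and checking it on pull. The key is hypothetical.

```python
import hashlib
import hmac


def image_digest(image: bytes) -> str:
    return "sha256:" + hashlib.sha256(image).hexdigest()


def sign(digest: str, key: bytes) -> str:
    # Stand-in for a real signature: an HMAC over the digest with a shared key.
    return hmac.new(key, digest.encode(), hashlib.sha256).hexdigest()


def verify(image: bytes, signature: str, key: bytes) -> bool:
    # Recompute the digest of what was pulled and check it against the signature.
    expected = sign(image_digest(image), key)
    return hmac.compare_digest(expected, signature)


key = b"hypothetical-signing-key"
pushed = b"image bytes"
signature = sign(image_digest(pushed), key)
print(verify(pushed, signature, key))             # True
print(verify(b"tampered bytes", signature, key))  # False
```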

Harbor uses Clair, an open source tool that draws on external vulnerability databases, to scan the images stored in the registry. Scans can run periodically, and each image’s vulnerability level is determined by comparing its findings against a severity threshold.
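Threshold-based classification can be sketched like this. The five-level severity scale and the rule that an image is as severe as its worst finding are assumptions for illustration, not Clair’s or Harbor’s exact scoring.

```python
from enum import IntEnum
from typing import Iterable


class Severity(IntEnum):
    NONE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


def image_severity(findings: Iterable[Severity]) -> Severity:
    """Assumed rule: an image is as severe as its worst finding."""
    return max(findings, default=Severity.NONE)


def exceeds_threshold(findings: Iterable[Severity], threshold: Severity) -> bool:
    return image_severity(findings) >= threshold


scan = [Severity.LOW, Severity.HIGH, Severity.MEDIUM]
print(image_severity(scan).name)                   # HIGH
print(exceeds_threshold(scan, Severity.CRITICAL))  # False
```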

These features greatly strengthen Harbor’s security, making it especially convenient for enterprises that want to self-host their proprietary images.

Designed for a cloud native environment, Harbor can be deployed as a stand-alone registry, or on Kubernetes by using a Helm chart.

TiKV: Distributed Transactional Key-Value Database

Relational databases have dominated the software world for years. However, once vertical scaling was no longer feasible and distributed systems began to rise, NoSQL solutions emerged. In the cloud age, data is almost always held in a distributed environment spread across numerous physical nodes.

Stateful systems require storage solutions that fit the needs of the cloud native environment: scalability, speed, data consistency, and fault tolerance. Getting all of these right is hard.

One exciting answer, also from China, is a transactional key-value database that offers geo-replication, horizontal scalability, strong consistency, and consistent distributed transactions.

TiKV is an open source distributed storage layer. It was originally developed by PingCAP as the storage layer for TiDB and was later accepted into the CNCF as a sandbox project. It combines NoSQL-level scalability with the ACID transactional guarantees of relational databases. Its layered architecture was inspired by Google’s Spanner and F1.

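TiKV brings together numerous technologies and mechanisms. Its architecture consists of the Placement Driver (PD), which manages cluster metadata and data placement; regions, which are contiguous ranges of the key space; Raft groups; and RocksDB as the underlying store.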
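In TiKV, a region is a contiguous range of the key space, and the Placement Driver tracks which region owns which range. The sketch below shows how a client could route a key to its region with a binary search over region start keys; `Region` and `RegionRouter` are hypothetical names, not TiKV’s client API.

```python
import bisect
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Region:
    region_id: int
    start_key: bytes  # inclusive
    end_key: bytes    # exclusive; b"" means "up to the end of the key space"


class RegionRouter:
    """Hypothetical client-side cache of region boundaries."""

    def __init__(self, regions: List[Region]) -> None:
        self._regions = sorted(regions, key=lambda r: r.start_key)
        self._starts = [r.start_key for r in self._regions]

    def locate(self, key: bytes) -> Region:
        # The owning region is the last one whose start_key <= key.
        i = bisect.bisect_right(self._starts, key) - 1
        if i < 0:
            raise KeyError(key)
        region = self._regions[i]
        if region.end_key and key >= region.end_key:
            raise KeyError(key)  # gap in the routing table
        return region


router = RegionRouter([
    Region(1, b"", b"g"),
    Region(2, b"g", b"p"),
    Region(3, b"p", b""),
])
print(router.locate(b"apple").region_id)  # 1
print(router.locate(b"grape").region_id)  # 2
print(router.locate(b"zebra").region_id)  # 3
```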

TiKV uses the Raft consensus algorithm, enhanced with several performance optimizations, to keep data consistent as each region is replicated across multiple nodes. The replicas of a region form a Raft group, which provides redundancy and reliability.
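Raft treats a log entry as committed once a majority of the group has stored it. Given each replica’s last stored log index, the commit index is the highest index held by a quorum, as this minimal sketch computes; the function names are hypothetical.

```python
from typing import List


def quorum(replicas: int) -> int:
    """Smallest majority of a Raft group with this many members."""
    return replicas // 2 + 1


def commit_index(match_index: List[int]) -> int:
    """Highest log index known to be stored on a majority of replicas.

    match_index[i] is the last log index replica i has persisted (the leader
    included). Raft's extra rule, that the entry must be from the leader's
    current term, is omitted here for brevity.
    """
    ordered = sorted(match_index, reverse=True)
    return ordered[quorum(len(ordered)) - 1]


print(commit_index([7, 7, 4]))        # 7: two of three replicas hold entry 7
print(commit_index([9, 8, 6, 3, 1]))  # 6: three of five replicas hold entry 6
```

With three replicas, a quorum is two, so a region can keep committing writes while one replica is down.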

TiKV uses RocksDB, which offers fast operations and stability, as its underlying storage engine. Each physical node runs two RocksDB instances: one for the stored data and one for the Raft log.

TiKV is especially useful as the storage layer for more complex distributed systems that require scalability, consistency between replicas, and distributed transactions with ACID properties. Numerous companies use TiKV today, and it has been used to build TiDB, TiSpark, Toutiao.com’s metadata service, Tidis, and Ele.me’s protocol layer.

With its strong focus on performance, consistency, and scalability, TiKV is a compelling key-value store for cloud native enterprises. You can try TiKV with TiDB, or on its own.

Chinese open source is becoming more mainstream, with these three major projects making it into the CNCF. With strong collaboration among the companies backing these projects, now including Chinese enterprises, and among the developers contributing to them, the open source scene will continue to thrive.